Basic Supervised

- Linear model + Square Loss -> Least Squares Estimator,

from Kutty slides or link

- Least Square Estimator + L2 regularization -> Ridge Regression \(\theta(\alpha)=\left(X^{T} X+\alpha I\right)^{-1} X^{T} y\)

- least square estimator + L1 regularization -> Lasso

讲了RidgeRegression的演进, 文中关于适定问题和不适定问题的关系,在ridge regression的应用有点意思。 其中full-rank 是well-posed, not full-rank === columns linear corelation, $X^T X$ determinent -> 0, 等应用的结论自己还不清楚,

随着的增大,各元素的绝对值均趋于不断变小,它们相对于正确值的偏差也越来越大。趋于无穷大时,趋于0。其中,随的改变而变化的轨迹,就称为岭迹。实际计算中可选非常多的 值,做出一个岭迹图,看看这个图在取哪个值的时候变稳定了,那就确定值了。 岭回归是对最小二乘回归的一种补充,它损失了无偏性,来换取高的数值稳定性,从而得到较高的计算精度。 ———————————————— 版权声明:本文为CSDN博主「mensyne」的原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接及本声明。 原文链接:https://blog.csdn.net/xgxyxs/article/details/79436195

well-posed problem (适定问题, a solution exists, the solution is unique, the solution’s behavior changes continuously with the initial conditions.如果某一个问题是适定的,它就有机会在使用了稳定演算法的电脑上取得解。如果问题是不适定的,就需要为数值处理重新以公式表示。这通常包含了额外的假设,例如:解的平滑性。这个过程称为正则化(Regularization)。)

Why do we want to contain two component in the objective?:

- Optimizing training loss encourages predictive models: Fitting well in training data at least get you close to training data which is hopefully close to the underlying distribution

- Optimizing regularization encourages simple models: Simpler models tends to have smaller variance in future predictions, making prediction stable Cite from ctq学长的slides

Decision Tree:

$x_i$ -> internal node tests an attritude/feature assign a value $x_i = v$, to each branch each leaf assigns a a class $ \hat{y}_{i} $

Goal: find the smallest decision tree that minimizes error. 训练的目的就是学习上述三个值。

Build Tree(DS):

if y_i = y for all examples in DS:

return y

elif x_i = x for all examples in DS:

return majority label

// 上述两个条件挺是分裂的点

else:

x_s = argmin_x H(y|x)

for each value v of x_s:

DS_v = {example in DS where x_s = v} // 按照x值进行数据分类

Build Tree(DS_v)

决策树是可以对应的到决策边界的 Avoid Overfitting:

- Set max depth.

- 节点里面样本数量小于某个值就停止

- 这两个值都是CV得到的。

- validation data 一旦worse就停止。

##Emsemble 目的: decrease variance without increasing bias. idea: average multiple models to reduce variance. 选取不同的数据集:

- 数据直接切割[不可行]

- bootstrap.经过数学分析-> 可行

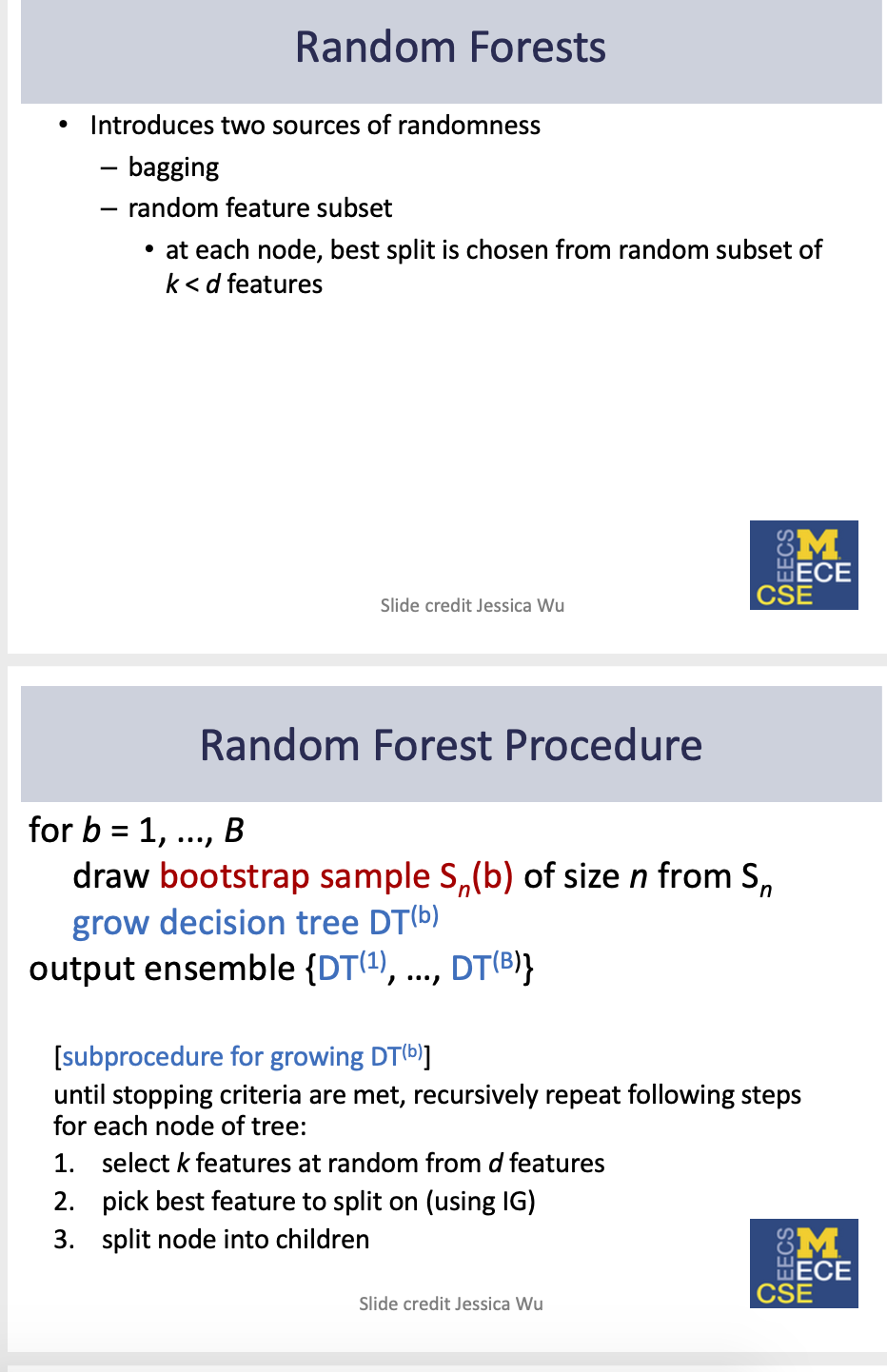

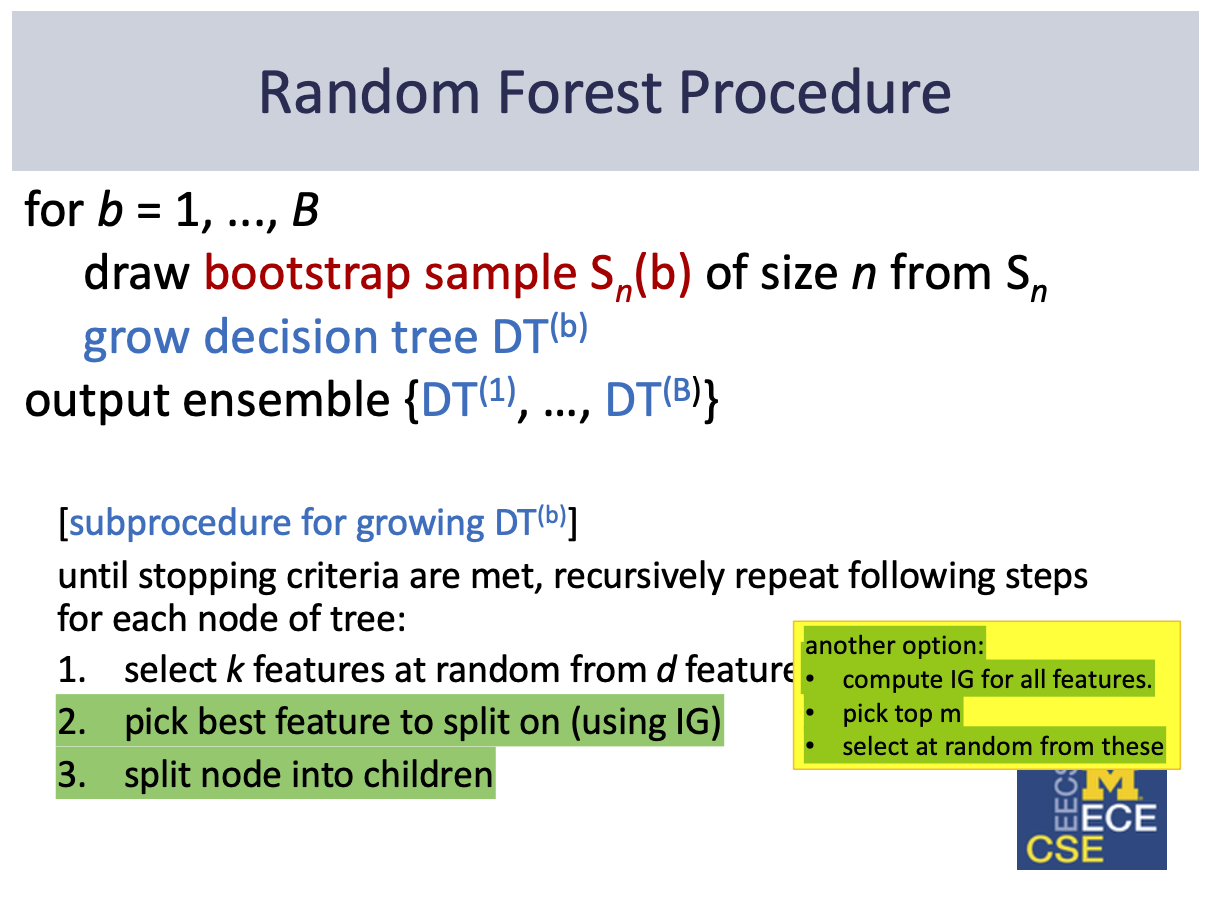

Random Forest:

From Kutty Slides, Lec10, “Random Forest”